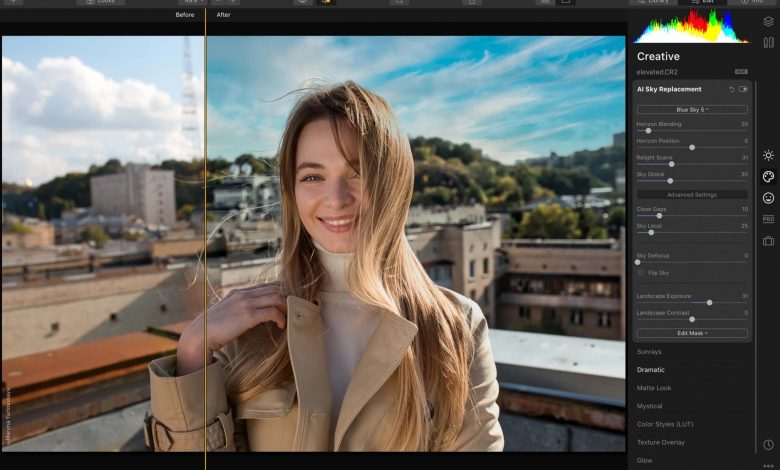

Five Ways To Enhance An Image Using Artificial Intelligence

Edge detection, or edge extraction, is a fundamental technique for separating objects in an image. It uses mathematical methods to identify points in a digital image where brightness changes sharply. These points usually mark boundaries, such as those between objects or around faces. Ultimately, the process can be used to improve the appearance and quality of images.

Segmentation

AI image enhancement is making strides in the field of image segmentation. Recent developments in this area have accelerated real-world applications such as cancer detection from X-ray images, autonomous driving, and more. But first, let’s take a closer look at the most popular image segmentation methods.

Semantic and instance segmentation are two standard methods of image segmentation. Semantic segmentation assigns each pixel in an image to a specific class, grouping pixels by appearance and texture; for example, every pixel that belongs to any car is labeled “car”, without distinguishing one car from another. Instance segmentation goes a step further and separates individual objects of the same class. Generally, semantic segmentation is faster, while instance segmentation combines AI and machine learning techniques to achieve a higher level of detail and accuracy. Because it produces a separate mask for each object, instance segmentation can be applied to images with overlapping regions, such as crowds.
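To make the difference concrete, here is a minimal sketch in plain Python. It assumes a simple brightness threshold in place of a learned per-pixel classifier, and it derives instances by grouping 4-connected pixels of the same class; the function names `semantic_segment` and `instance_segment` are hypothetical.

```python
from collections import deque

def semantic_segment(image, threshold):
    """Assign every pixel a class: 1 = bright object, 0 = background."""
    return [[1 if value >= threshold else 0 for value in row] for row in image]

def instance_segment(mask):
    """Give each 4-connected region of class-1 pixels its own instance id."""
    height, width = len(mask), len(mask[0])
    labels = [[0] * width for _ in range(height)]
    next_id = 0
    for y in range(height):
        for x in range(width):
            if mask[y][x] == 1 and labels[y][x] == 0:
                next_id += 1  # found a new, unlabeled object
                labels[y][x] = next_id
                queue = deque([(y, x)])
                while queue:  # breadth-first flood fill of this object
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if (0 <= ny < height and 0 <= nx < width
                                and mask[ny][nx] == 1 and labels[ny][nx] == 0):
                            labels[ny][nx] = next_id
                            queue.append((ny, nx))
    return labels, next_id

# Two separate bright objects of the same class on a dark background
image = [
    [200, 210, 0, 0, 0],
    [205, 220, 0, 0, 0],
    [0, 0, 0, 190, 195],
    [0, 0, 0, 185, 200],
]
semantic = semantic_segment(image, threshold=128)
instances, count = instance_segment(semantic)
print(count)  # 2
```

The semantic map gives both objects the same class (1), while the instance pass assigns them different ids (1 and 2).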

Edge extraction

Edge extraction is critical in image enhancement, but it’s often impossible to extract ideal edges from real images of even moderate complexity. To cope with this, techniques such as non-maximum suppression and hysteresis thresholding are used. Non-maximum suppression thins edges by keeping only pixels whose gradient strength is a local maximum. Hysteresis thresholding then uses two thresholds to distinguish strong pixels from weak ones: strong pixels are kept, weak pixels are kept only if they connect to a strong pixel, and the remaining weak, irrelevant pixels are discarded, leaving the desired boundaries.
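Hysteresis thresholding can be sketched as follows, assuming the gradient magnitudes have already been computed and thinned by non-maximum suppression. The function name `hysteresis`, the use of 8-connected neighbors, and the thresholds in the example are illustrative choices.

```python
from collections import deque

def hysteresis(magnitude, low, high):
    """Keep strong pixels (>= high) and weak pixels (>= low) connected to them."""
    height, width = len(magnitude), len(magnitude[0])
    edges = [[False] * width for _ in range(height)]
    queue = deque()
    # Seed the result with every strong pixel
    for y in range(height):
        for x in range(width):
            if magnitude[y][x] >= high:
                edges[y][x] = True
                queue.append((y, x))
    # Grow from strong pixels into 8-connected weak neighbours
    while queue:
        cy, cx = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = cy + dy, cx + dx
                if (0 <= ny < height and 0 <= nx < width
                        and not edges[ny][nx] and magnitude[ny][nx] >= low):
                    edges[ny][nx] = True
                    queue.append((ny, nx))
    return edges

magnitude = [
    [0, 0, 0, 0, 0, 0],
    [90, 60, 55, 0, 0, 50],  # one strong pixel, a chain of weak ones, an isolated weak one
    [0, 0, 0, 0, 0, 0],
]
edges = hysteresis(magnitude, low=40, high=80)
```

Here the weak pixels at 60 and 55 survive because they chain back to the strong pixel at 90, while the isolated weak pixel at 50 is discarded.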

Another edge detection technique uses k-nearest neighbor (k-NN) classification. It identifies weak detail within a region by comparing each sample’s brightness and contrast with those of labeled examples and assigning the label held by most of its nearest neighbors. It can be combined with region merging, which is repeated until no more merging is possible. This approach can produce impressive results, and because k-NN simply stores its labeled examples instead of fitting a large model, it needs very little training.
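A minimal sketch of k-NN pixel classification follows. Everything in it is an assumption for illustration: each pixel is described by two hypothetical features (its brightness and the max-minus-min contrast of its 3x3 neighborhood), the labeled training samples are invented, and k = 3. It shows only the nearest-neighbor vote, not the region-merging loop.

```python
import math
from collections import Counter

def local_features(image, y, x):
    """Hypothetical features: (brightness, max - min over the 3x3 neighbourhood)."""
    height, width = len(image), len(image[0])
    window = [image[ny][nx]
              for ny in range(max(0, y - 1), min(height, y + 2))
              for nx in range(max(0, x - 1), min(width, x + 2))]
    return (image[y][x], max(window) - min(window))

def knn_predict(train, query, k=3):
    """Return the majority label among the k training samples closest to `query`."""
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Invented labelled samples: ((brightness, contrast), label)
train = [
    ((100, 5), "flat"), ((30, 2), "flat"), ((200, 8), "flat"),
    ((120, 150), "edge"), ((60, 180), "edge"), ((180, 140), "edge"),
]

# A dark region next to a bright region
image = [
    [10, 10, 10, 200, 200],
    [10, 10, 10, 200, 200],
    [10, 10, 10, 200, 200],
]
edge_map = [[knn_predict(train, local_features(image, y, x)) == "edge"
             for x in range(len(image[0]))]
            for y in range(len(image))]
```

The two columns on either side of the brightness step are classified as edges; the flat interiors are not.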

Edge detection

AI-based image analysis uses machine learning techniques to detect edges in a wide range of images. These algorithms are robust and fast, and they can detect edges even between pixels of the same brightness. They do this by also considering the difference in color from neighboring pixels and marking an edge when that difference exceeds a threshold.
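The color-difference rule can be illustrated with a simple hand-written sketch rather than a learned model. It assumes RGB pixels, measures Euclidean distance to the right and lower neighbors, and uses an arbitrary threshold; `color_edges` and `brightness` are hypothetical helpers.

```python
import math

def brightness(pixel):
    """Plain mean of R, G, B (an illustrative brightness measure)."""
    return sum(pixel) / 3

def color_edges(image, threshold):
    """Mark a pixel as an edge if its RGB distance to the right or lower neighbour exceeds threshold."""
    height, width = len(image), len(image[0])
    edges = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            for ny, nx in ((y, x + 1), (y + 1, x)):
                if ny < height and nx < width and math.dist(image[y][x], image[ny][nx]) > threshold:
                    edges[y][x] = True
    return edges

RED, GREEN = (255, 0, 0), (0, 255, 0)
image = [[RED, RED, GREEN, GREEN] for _ in range(2)]
edges = color_edges(image, threshold=50)
```

Red and green have the same mean brightness here, so a brightness-only test would see no boundary, but the color distance flags the red/green transition.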

The Canny edge detector is a classic multi-stage algorithm. However, because it relies on fixed thresholds, it is not very practical for images with varying lighting conditions. Holistically-Nested Edge Detection (HED) uses end-to-end deep learning and can capture the edges of objects more accurately than the Canny edge detector. Therefore, HED is better suited to unknown and uncontrolled environments.
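For reference, the stages of a common formulation of the Canny detector can be written out as below. This sketch assumes a Gaussian $G_\sigma$ for smoothing and Sobel kernels for the gradient step; implementations differ in the exact filters and in how the gradient direction is quantized.

```latex
% 1. Smooth the image I with a Gaussian kernel G_sigma
I_\sigma = G_\sigma * I

% 2. Estimate the gradient (here with Sobel kernels)
G_x = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix} * I_\sigma,
\qquad
G_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix} * I_\sigma

M = \sqrt{G_x^2 + G_y^2}, \qquad \theta = \operatorname{atan2}(G_y, G_x)

% 3. Non-maximum suppression: keep pixel p only if it is a local maximum
%    along the gradient direction (d_theta = unit step towards the
%    neighbour closest to theta)
M(p) \ge M(p + d_\theta) \quad\text{and}\quad M(p) \ge M(p - d_\theta)

% 4. Double threshold and hysteresis, with T_low < T_high:
%    M(p) >= T_high            -> strong edge
%    T_low <= M(p) < T_high    -> weak edge, kept only if connected to a strong edge
%    M(p) < T_low              -> discarded
```

The fixed pair $T_{low}$, $T_{high}$ in step 4 applies to the whole image, which is why uneven lighting can cause Canny to miss edges in dark regions or over-detect in bright ones.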

Compression

When creating a digital image, it is common to apply various techniques to enhance it. These techniques improve image and display quality and prepare the image for use in computer vision applications. They usually involve a series of transformations; for example, they can brighten low-light pictures or fix optical distortions. But there’s more to enhancing images than just making them look good.
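As one illustration of brightening a low-light picture, here is a minimal gamma-correction sketch for 8-bit grayscale values. Gamma correction is our choice of example rather than a technique named above, and the function name `gamma_correct` is hypothetical.

```python
def gamma_correct(pixels, gamma):
    """Map 8-bit values through out = 255 * (in / 255) ** gamma; gamma < 1 brightens."""
    return [round(255 * (value / 255) ** gamma) for value in pixels]

dark = [0, 16, 64, 128, 255]
bright = gamma_correct(dark, gamma=0.5)
print(bright)  # [0, 64, 128, 181, 255]
```

Because the curve is steepest near zero, dark values are lifted the most while black and white stay fixed.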

One classical technique, histogram equalization, is a simple method that enhances image contrast without modeling the scene’s actual illumination. As a result, it is prone to producing under- or over-enhanced images. Moreover, it can fail to preserve the image’s visual quality because it works on the global histogram and ignores each pixel’s neighborhood. Variational approaches that add regularization terms to the histogram have been proposed to address these flaws.
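Classic global histogram equalization, the baseline described above (not the variational method), can be sketched in plain Python for 8-bit grayscale values. Each gray level is remapped through the normalized cumulative histogram; the function name is hypothetical.

```python
def equalize_histogram(pixels, levels=256):
    """Global histogram equalization of a flat list of integer gray levels."""
    histogram = [0] * levels
    for value in pixels:
        histogram[value] += 1
    # Cumulative distribution function (counts, not yet normalized)
    cdf, running = [], 0
    for count in histogram:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:  # only one gray level present: nothing to stretch
        return list(pixels)
    # Stretch the CDF so the darkest present level maps to 0 and the brightest to levels - 1
    lookup = [round((c - cdf_min) / (total - cdf_min) * (levels - 1)) for c in cdf]
    return [lookup[value] for value in pixels]

low_contrast = [100, 100, 101, 102, 103, 104, 105]
print(equalize_histogram(low_contrast))  # [0, 0, 51, 102, 153, 204, 255]
```

The narrow 100 to 105 range is spread across the full 0 to 255 scale. Each pixel is mapped purely by its gray level, with no regard for its neighbors, which is the limitation noted above.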

Morphological processing

The term “morphology” describes a set of image processing operations based on shapes. In a nutshell, a morphological operation applies a structuring element to an input image and produces an output image of the same size. The value of each output pixel is computed by comparing the corresponding input pixel with its neighbors, where the structuring element defines which neighbors are considered. Because the result depends on the shape of the structuring element, these operations are well suited to shape-based analysis.
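For a grayscale image $f$ and a flat structuring element $B$ (the set of offsets marked 1), the two basic operations are commonly written as follows. For binary images, the max and min reduce to logical OR and AND.

```latex
% Dilation: each output pixel takes the largest value under the (reflected) element
(f \oplus B)(x) = \max_{b \in B} f(x - b)

% Erosion: each output pixel takes the smallest value under the element
(f \ominus B)(x) = \min_{b \in B} f(x + b)
```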

There are two basic operations in the morphological processing of images. The first, dilation, adds pixels to the boundaries of objects. The second, erosion, removes pixels from object boundaries. Both rely on structuring elements: small matrices of 0s and 1s whose shape determines which neighbors of each pixel are examined. Although these operations are often described for binary images, they also work on grayscale images, where they take the numerical values of pixels into account.
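Binary dilation and erosion can be sketched in plain Python as below. The sketch assumes a symmetric structuring element (so reflecting it for dilation changes nothing) centered on each pixel, and it treats pixels outside the image as background (0); the helper names are hypothetical.

```python
def _apply(image, element, combine):
    """Slide `element` over `image`; out-of-bounds neighbours count as background (0)."""
    height, width = len(image), len(image[0])
    eh, ew = len(element), len(element[0])
    cy, cx = eh // 2, ew // 2  # origin at the element's centre
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            values = []
            for ey in range(eh):
                for ex in range(ew):
                    if element[ey][ex]:  # only positions marked 1 are examined
                        ny, nx = y + ey - cy, x + ex - cx
                        inside = 0 <= ny < height and 0 <= nx < width
                        values.append(image[ny][nx] if inside else 0)
            out[y][x] = combine(values)
    return out

def dilate(image, element):
    """Output is 1 if any examined neighbour is 1 (assumes a symmetric element)."""
    return _apply(image, element, lambda values: int(any(values)))

def erode(image, element):
    """Output is 1 only if every examined neighbour is 1."""
    return _apply(image, element, lambda values: int(all(values)))

cross = [[0, 1, 0],
         [1, 1, 1],
         [0, 1, 0]]
square = [[0, 0, 0, 0, 0],
          [0, 1, 1, 1, 0],
          [0, 1, 1, 1, 0],
          [0, 1, 1, 1, 0],
          [0, 0, 0, 0, 0]]
eroded = erode(square, cross)   # shrinks the 3x3 square to its centre pixel
dilated = dilate(square, cross)  # grows it by one pixel on each side, corners excluded
```

With the cross-shaped element, erosion strips the square's boundary pixels, while dilation adds a one-pixel rim along each side but leaves the image corners empty because the cross has no diagonal entries.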
